I've been trying, off and on, to find ways to build computational models of political events in order to predict their outcomes. My starting point is an intuition that events evolve in a structured way: if we can estimate the probability of each of the smaller steps in that evolution, we can estimate the probability of the overall outcome.

For example, consider the question of whether Afghanistan will approve the bilateral security agreement (BSA) offered by the United States. The Loya Jirga (assembly of elders) has just approved the agreement and urged Karzai to sign it before the end of the year. In order for Karzai to sign it, the following internal events must occur:

E1: The Afghan National Assembly must approve the BSA. This will no doubt be influenced by the fact that the Loya Jirga has already approved it, since the Afghan constitution declares the Loya Jirga to be the highest expression of the will of the Afghan people. However, there is some question of whether the Loya Jirga was properly assembled according to the constitution, and some sense that Karzai convened the Loya Jirga because he thought he could influence its decision more easily than he could influence the National Assembly directly. Let's put the probability that the National Assembly approves at something like 0.9.

E2: Karzai must choose to sign the BSA before the end of the year. Currently he is calling for it to be signed next year.

Intuitively, the probability of the total event should be something like:

$$P_{E1} \cdot P_{E2}$$

where $P_E$ is the probability that event $E$ will occur.

I do not know specifically why Karzai is holding out on signing the BSA this year. In the absence of that knowledge, it is tempting to assign $P_{E2} = 0.5$, giving a final probability of $0.9 \times 0.5 = 0.45$. However, my recent experience suggests there is another way to look at these questions.
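The naive estimate is just a product of step probabilities. A minimal Python sketch, assuming the steps are independent so their probabilities simply multiply; the function name `chain_probability` is my own, for illustration only:

```python
import math


def chain_probability(*step_probs: float) -> float:
    """Probability that every step in a chain occurs, treating the steps as independent."""
    return math.prod(step_probs)


# P_E1 = 0.9 (National Assembly approves), P_E2 = 0.5 (the "no information" guess)
print(chain_probability(0.9, 0.5))
```

Adding more steps to the chain can only lower the result, which is why long chains of "likely" events can still add up to an unlikely outcome.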

In the most general description, event $E2$ is an irreversible state change. Once Karzai chooses to sign the BSA, he cannot (in any practical sense) reverse that decision and return to his original state. He could make his decision on any day, so $P_{E2}$ should really decay towards zero as the deadline approaches, according to a formula like the following:

$$P_{E2} = 1 - (1 - p_{E2})^d$$

where $p_{E2}$ is the probability that Karzai will change his mind on any given day (treating each day as an independent chance), and $d$ is the number of days remaining before the end of the year.
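A short Python sketch of the decay formula above. It assumes, as the formula does, that every remaining day is an independent chance with the same daily probability; the daily value of 0.1 used below is a hypothetical number chosen only to show the shape of the curve:

```python
def prob_before_deadline(daily_prob: float, days_left: int) -> float:
    """P = 1 - (1 - p)^d: chance an irreversible change happens at least once in d days.

    Assumes every remaining day is an independent chance with the same probability p.
    """
    if not 0.0 <= daily_prob <= 1.0:
        raise ValueError("daily_prob must be between 0 and 1")
    return 1.0 - (1.0 - daily_prob) ** days_left


# Hypothetical daily probability of 0.1; P shrinks as the deadline nears.
for d in (30, 10, 3, 1, 0):
    print(d, round(prob_before_deadline(0.1, d), 3))
```

Note that a daily probability of zero gives zero for any number of days: if nothing can change his mind, no amount of time helps.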

If delaying the BSA is good in itself for Karzai, then he will never change his mind, so we could say that $p_{E2}$ is zero and so, likewise, is $P_{E2}$. That might be the case if Karzai believes he could be charged with treason, assassinated, or otherwise subject to persecution or prosecution for signing the BSA.

On the other hand, Karzai may be threatening to delay signing the BSA in order to extract some concession from the United States. In that case, failing to sign the BSA by the end of the year would mean his gambit had failed. The odds of his signing in that case are calculated very differently, so we should probably treat this as two separate events:

E2a: Karzai signs the BSA even though he believes he could be persecuted as a result.

E2b: Karzai signs the BSA after receiving a concession from the United States.

In order to calculate $P_{E2}$ from these two values, we need to decide how likely each explanation is to be Karzai's real motive. Let's say there is a 0.1 probability that Karzai fears serious persecution and a 0.9 probability that he is trying to wring out a concession:

$$P_{E2} = 0.1\,P_{E2a} + 0.9\,P_{E2b}$$
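This weighting is the law of total probability over mutually exclusive motives. A minimal Python sketch, assuming the weights sum to 1; `mixture_probability` is a hypothetical helper name, and the 0.96 used for the concession case is a placeholder, not an estimate from this post:

```python
import math


def mixture_probability(scenarios: list[tuple[float, float]]) -> float:
    """Weighted average of P(event | scenario) over mutually exclusive scenarios.

    `scenarios` is a list of (weight, conditional_probability) pairs; the weights
    are the probabilities of each scenario and must sum to 1.
    """
    total_weight = sum(w for w, _ in scenarios)
    if not math.isclose(total_weight, 1.0):
        raise ValueError(f"scenario weights sum to {total_weight}, not 1")
    return sum(w * p for w, p in scenarios)


# Persecution case: he never changes his mind, so P_E2a = 0.0.
# Concession case: 0.96 is a hypothetical placeholder for P_E2b.
print(mixture_probability([(0.1, 0.0), (0.9, 0.96)]))
```

Rejecting weights that do not sum to 1 catches the case where the listed motives overlap or leave something out.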

As the end of the year approaches, the value of Karzai's signature drops, so the value of what he expects in return should decrease. Meanwhile, the value of what the US offers should gradually increase until it meets the value of what Karzai has to offer. If both players know each other well, then they have already calculated that they will reach an agreement before the end of the year, and the only question is whether they are wrong. In that case, $P_{E2b}$ should depend on the probability that the two players have correctly estimated each other's thresholds for negotiation:

$$P_{E2b} = 1 - (1 - C_{E2b})^d$$

where $C_{E2b}$ is the probability, on any given day, that the two parties have correctly assessed each other's positions and can reach an agreement.
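One way to sanity-check this closed form is a small Monte Carlo simulation: walk forward day by day, give each day an independent chance $C_{E2b}$ of closing the deal, and stop at the first success because a signature cannot be undone. This is a sketch under exactly those assumptions; `simulate_agreement` and its parameters are hypothetical names:

```python
import random


def simulate_agreement(c_daily: float, days_left: int,
                       trials: int = 100_000, seed: int = 0) -> float:
    """Fraction of simulated runs in which agreement is reached before the deadline.

    Each remaining day is an independent chance c_daily that the parties have read
    each other correctly and close the deal; once they agree, the run stops because
    the signature is irreversible.
    """
    rng = random.Random(seed)  # seeded so the estimate is reproducible
    agreed = 0
    for _trial in range(trials):
        for _day in range(days_left):
            if rng.random() < c_daily:
                agreed += 1
                break
    return agreed / trials


c, d = 0.8, 2
print(simulate_agreement(c, d), 1 - (1 - c) ** d)  # simulated vs. closed form
```

The simulated fraction should land close to the closed-form value, and the gap shrinks as `trials` grows.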

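So, if we estimate 0.9 odds that the National Assembly will concur with the decision of the Loya Jirga, 0.8 odds on any given day that the two players have correctly estimated each other's positions, and $P_{E2a} = 0$ (in the persecution scenario Karzai never changes his mind), then the total likelihood of a timely signature is:

$$P_{\text{total}} = P_{E1} \cdot \left(0.1\,P_{E2a} + 0.9\,P_{E2b}\right)$$

$$P_{\text{total}} = 0.9 \cdot \left(0.1 \cdot 0 + 0.9 \cdot \left(1 - (1 - 0.8)^d\right)\right)$$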

Under that model, the odds of Karzai signing the BSA hover at about 0.81 till the last three days of December, when they suddenly plummet to zero.

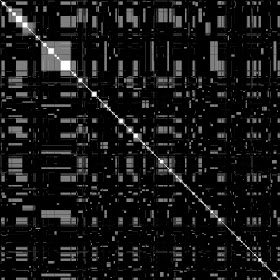
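The curve in the figure can be reproduced with a few lines of Python. This is a sketch of the model as stated in this post, with the estimates (0.9, 0.1, 0.0, 0.8) as default parameters; the function and parameter names are my own:

```python
def p_total(days_left: int,
            p_e1: float = 0.9,            # National Assembly concurs with the Loya Jirga
            w_persecution: float = 0.1,   # probability Karzai fears persecution
            p_e2a: float = 0.0,           # persecution case: he never changes his mind
            c_e2b: float = 0.8) -> float:  # daily chance the parties reach agreement
    """P_total = P_E1 * (w * P_E2a + (1 - w) * P_E2b), with P_E2b = 1 - (1 - C_E2b)^d."""
    p_e2b = 1.0 - (1.0 - c_e2b) ** days_left
    p_e2 = w_persecution * p_e2a + (1.0 - w_persecution) * p_e2b
    return p_e1 * p_e2


for d in range(10, -1, -1):
    print(f"{d:2d} days left: {p_total(d):.3f}")
```

With these numbers the result stays at essentially 0.81 until three days remain (0.80352), then drops to 0.7776 with two days left, 0.648 with one, and 0 at the deadline.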

One of the things that bedevil me here, though, is the unknown intermediate steps. If I have time, I would like to see what kind of behavior emerges if I simulate situations where there are thousands of dependent steps and thousands of possible routes to a particular outcome. Do complex networks of interrelated events conform to a different set of rules en masse?

(Note added 12/17/2013: The Karzai example here does not work if, for example, Karzai is engaged in negotiations with Iran. The reason is that a settled state of negotiations with the US gives Karzai the opportunity to develop other options and choose between them. So maybe we should expect that a less-than-satisfactory settled negotiation will usually stall while other options are developed.)