In my last post, I made a proposal about vowels, and inadvertently revealed that I am not a real Voynichologist, because I referred to [p,f,t,k] as "tall, loopy letters" instead of using their conventional name: gallows letters.

First, consider the distribution of the gallows letters within words. The following graph shows the relative frequency of gallows letters compared to other letters in the first ten letters of Voynich words:

Gallows letters are heavily represented at the beginnings of words, and scarcely represented later in words. If they represent vowels, then what does this imply? Perhaps the language has syllable stress, and the stress normally falls on the first syllable of a word, and only the stressed vowel is written.
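A positional profile like the one in the graph can be computed with a short sketch. It assumes that "relative frequency" means the share of gallows letters at a given position divided by their share over the whole text, so 1.0 means parity. The definition and the function name `positional_ratio` are my own, not necessarily what produced the graph.

```python
from collections import Counter

# EVA gallows letters, as named in the post: [p, f, t, k].
GALLOWS = frozenset("pftk")


def positional_ratio(words, targets=GALLOWS, max_pos=10):
    """For positions 0..max_pos-1, return the share of letters at that position
    that belong to `targets`, divided by the share of `targets` among all
    letters in the text (1.0 = parity, >1.0 = over-represented)."""
    total = Counter()  # letters seen at each position
    hits = Counter()   # target letters seen at each position
    all_letters = all_hits = 0
    for word in words:
        for pos, ch in enumerate(word):
            is_target = int(ch in targets)
            # every letter counts toward the overall share...
            all_letters += 1
            all_hits += is_target
            # ...but only the first max_pos positions get their own bucket
            if pos < max_pos:
                total[pos] += 1
                hits[pos] += is_target
    overall = all_hits / all_letters if all_letters else 0.0
    return [
        (hits[p] / total[p]) / overall if total[p] and overall else 0.0
        for p in range(max_pos)
    ]
```

For instance, `positional_ratio(["pa", "ka", "ab"])` gives 2.0 for the first position and 0.0 for the second, because both gallows letters in that toy input are word-initial.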

That could not be the whole picture, however. In English, a language with a bias towards stressing the first syllable, the relative frequency of the vowels compared to other phonemes in the first ten phonemes looks like this (data from the CMU Pronouncing Dictionary):

As you can see, stressed vowels are slightly above parity with other phonemes as the second phoneme of an English word. To explain the gallows letters as stressed vowels, we would also have to assume that some particular stressed vowel (or vowels) is implied in the first syllable whenever no other vowel is written, and this would have to apply to about four out of five words that are stressed on the first syllable.
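The English side of the comparison can be sketched from the CMU Pronouncing Dictionary's plain-text format. Each line there is a word followed by ARPAbet phonemes, and every vowel carries a stress digit (0 = none, 1 = primary, 2 = secondary). The hypothetical helper below reports, for each position, the share of phonemes that are primary-stressed vowels. It counts dictionary entries rather than words in running text, which is an assumption about how the chart was made.

```python
def stressed_vowel_share(lines, max_pos=10):
    """Share of phonemes at each position (0-based) that are primary-stressed
    vowels, i.e. ARPAbet symbols ending in '1', from CMUdict-format lines."""
    total = [0] * max_pos
    stressed = [0] * max_pos
    for line in lines:
        if not line.strip() or line.startswith(";;;"):
            continue  # skip blank lines and CMUdict comment lines
        _word, *phones = line.split()
        for pos, ph in enumerate(phones[:max_pos]):
            total[pos] += 1
            if ph.endswith("1"):
                stressed[pos] += 1
    return [s / t if t else 0.0 for s, t in zip(stressed, total)]
```

With just the two entries `ABANDON  AH0 B AE1 N D AH0 N` and `CAT  K AE1 T`, positions 2 and 3 (0-based 1 and 2) each come out at 0.5.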

Better Reading

▼

Pages

▼

Tuesday, September 24, 2013

Monday, September 23, 2013

I couldn't resist: The Voynich Vowels

OK, I didn't mean to get started down this route because I'm a little overworked, but I ran my contextual distance analysis on the Voynich graphemes (in EVA transcription). Based on that, here is my best guess at the Voynich vowels.

The following image shows a map of the similarity of the graphemes in the Voynich Manuscript. As with the King James Genesis, we have a small island of five graphemes that group together with the word boundary (#). I will call these the *vowels (note the asterisk, which is my caveat that this is all hypothetical).

The *vowels, in EVA transcription, are the letters z, t, k, f and p. The Voynich graphemes represented here are:

There is an obvious graphemic similarity here: The tall, loopy letters are all *vowels, as is the z, which might be called a small, loopy letter.

Interestingly, the closest contextual similarities here are between the graphemes that look the most similar:

sim(t, k) = 0.88

sim(f, p) = 0.87

By contrast, the strongest similarity between any two vowels in Latin is sim(e, i) = 0.77, while in English it is sim(a, o) = 0.59.
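The post doesn't spell out the similarity measure, so the following is only a stand-in. Each grapheme, with `#` for the word boundary, gets a vector of left-neighbour and right-neighbour counts, and two graphemes are compared by cosine similarity. The function names are hypothetical, and the numbers this produces will not match the values quoted above.

```python
import math
from collections import Counter, defaultdict


def context_vectors(text):
    """Map each grapheme (and '#' for word boundaries) to a Counter of
    ('L', left neighbour) and ('R', right neighbour) counts."""
    vecs = defaultdict(Counter)
    seq = "#" + "#".join(text.split()) + "#"
    for i, ch in enumerate(seq):
        if i > 0:
            vecs[ch][("L", seq[i - 1])] += 1
        if i + 1 < len(seq):
            vecs[ch][("R", seq[i + 1])] += 1
    return vecs


def cosine(u, v):
    """Cosine similarity of two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    nu = math.sqrt(sum(x * x for x in u.values()))
    nv = math.sqrt(sum(x * x for x in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0
```

Keeping left and right contexts separate means two graphemes only look alike if they appear in the same position relative to their neighbours. This is the property that makes word-initial letters cluster with `#`.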

If the *vowels are indeed the Voynich vowels, then I propose that we are looking at a script with only three vowels, and the EVA [t,k] and [f,p] are merely graphemic variants of each other.

Is it possible that the underlying orthography is based on something like the way Hebrew script is used to write Yiddish, with [א,ו,י] representing vowels?

In fact, I'll go a step further, just so I can claim credit if this turns out to be true: Given my earlier feeling that this is a polyglot or macaronic text, is it possible that the underlying text is in Hebrew and Yiddish (or another language of the Jewish diaspora, like Ladino)?

The following image shows a map of the similarity of the graphemes in the Voynich Manuscript. Like the King James genesis, we have a small island of five graphemes which occur with the word boundary (#). I will call these the *vowels (note the asterisk, which is my caveat that this is all hypothetical).

The *vowels, in EVA transcription, are the letters z, t, k, f and p. The Voynich graphemes represented here are:

There is an obvious graphemic similarity here: The tall, loopy letters are all *vowels, as is the z, which might be called a small, loopy letter.

Interestingly, the closest contextual similarities here are between the graphemes that look the most similar:

sim(t, k) = 0.88

sim(f, p) = 0.87

By contrast, the strongest similarities between any two vowels in Latin is sim(e, i) = 0.77, while in English it is sim(a, o) = 0.59.

If the *vowels are indeed the Voynich vowels, then I propose that we are looking at a script with only three vowels, and the EVA [t,k] and [f,p] are merely graphemic variants of each other.

Is it possible that the underlying orthography is based on something like the way Hebrew is used to write Yiddish, using the [א,ו,י] to represent vowels?

In fact, I'll go a step further, just so I can claim credit if this turns out to be true: Given my earlier feeling that this is a polyglot or macaronic text, is it possible that the underlying text is in Hebrew and Yiddish (or another language of the Jewish diaspora, like Ladino)?

How would you use semantic similarities for decipherment?

A couple weeks ago, I started taking an online course in cryptography taught by Dan Boneh from Stanford on Coursera. (It's excellent, by the way.) I've been a little slow to post anything new, but I wanted to incompletely finish a thought.

Why should we care about mapping semantic relationships in a text? Well, I think it could be a valuable tool for deciphering an unknown language.

First, what I've been calling "semantic similarity" is really contextual similarity, but it happens to coincide with semantic similarity in content words (content-bearing nouns, verbs, adjectives, adverbs). In function words, it's really functional similarity. In phonemes (which I don't think I have mentioned before) it correlates with phonemic similarity.

Here's an example of a map generated from the alphabetic letters in the book of Genesis in King James English:

The most obvious feature of this map is that all of the vowels (and the word-boundary #) are off on their own, separated from all of the consonants. If we had an alphabetic text in an unknown script in which vowels and consonants were written, we could probably identify the vowels.
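Picking out the island around `#` can be sketched as single-linkage grouping. This approach keeps every pair whose similarity clears a threshold and collects everything reachable from `#`. The threshold, the function name `island_of`, and the toy similarities in the usage note are all assumptions for illustration.

```python
def island_of(anchor, sims, threshold):
    """Return the set of items linked to `anchor` by a chain of pairwise
    similarities >= threshold (its single-linkage component).
    `sims` maps frozenset({a, b}) of two distinct items -> similarity."""
    neighbours = {}
    for pair, s in sims.items():
        a, b = tuple(pair)
        if s >= threshold:
            neighbours.setdefault(a, set()).add(b)
            neighbours.setdefault(b, set()).add(a)
    seen, stack = {anchor}, [anchor]
    while stack:  # depth-first walk over above-threshold links
        for n in neighbours.get(stack.pop(), ()):
            if n not in seen:
                seen.add(n)
                stack.append(n)
    return seen
```

For example, consider the made-up values `{frozenset("#a"): 0.6, frozenset("ae"): 0.7, frozenset("bc"): 0.8, frozenset("ab"): 0.1}`. With a threshold of 0.5, `island_of("#", sims, 0.5)` returns `{"#", "a", "e"}`. The letter `e` is included through its link to `a`, even though it has no direct link to `#`.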

There are finer distinctions that I may get into one day when I have more time, but the main point here is that contextual similarities of this type measure something valuable from a decipherment perspective. I believe it should be possible to get the general shape of the phonology of an alphabetic writing system, to separate out prepositions or postpositions (if they exist), and to eventually distinguish parts of speech.

Why should we care about mapping semantic relationships in a text? Well, I think it could be a valuable tool for deciphering an unknown language.

First, what I've been calling "semantic similarity" is really contextual similarity, but it happens to coincide with semantic similarity in content words (content-bearing nouns, verbs, adjectives, adverbs). In function words, it's really functional similarity. In phonemes (which I don't think I have mentioned before) it correlates with phonemic similarity.

Here's an example of a map generated from the alphabetic letters in the book of Genesis in King James English:

The most obvious feature of this map is that all of the vowels (and the word-boundary #) are off on their own, separated from all of the consonants. If we had an alphabetic text in an unknown script in which vowels and consonants were written, we could probably identify the vowels.

There are finer distinctions that I may get into one day when I have more time, but the main point here is that contextual similarities of this type measure something valuable from a decipherment perspective. I believe it should be possible to get the general shape of the phonology of an alphabetic writing system, to separate out prepositions or postpositions (if they exist), and to eventually distinguish parts of speech.

Friday, September 13, 2013

Visualizing the semantic relationships within a text

In the last few posts, I had some images that were supposed to give a rough idea of the semantic relationships within a text. Over the last few days I have been rewriting my lexical analysis tools in C# (the old ones were in C++ and Tcl), and that has given me a chance to play with some new ways of looking at the data.

When I depict this data in two dimensions, I'm really showing something like the shadow of a multi-dimensional object, and I have to catch that object at the right angle to get a meaningful shadow. In the following images, I have imagined the tokens in the text as automata that are bound by certain rules, but otherwise behaving randomly, in an attempt to produce an organic image of the data.

For example, each point of this image represents a word in the lexicon of the King James version of the book of Genesis. The words all started at random positions in space, but were made to iteratively organize themselves so that their physical distances from other words roughly reflected the semantic distance between them. After running for an hour, the process produced the following image:

There are clearly some groups forming within the universe of words. Since this process is iterative, the longer it runs the more ordered the data should become (up to a point)...but I haven't had the patience to run it for more than an hour.
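The self-organizing process can be sketched as a simple stochastic procedure. It assumes the target distance between two words is something like 1 minus their similarity. At each step it picks a random pair, compares their on-screen distance with the target, and nudges both points along the line between them. The rule, step size and names here are assumptions, not the exact rules behind the images.

```python
import math
import random


def layout(dist, n, steps=20000, rate=0.05, seed=0):
    """Place n points in 2-D so that Euclidean distances roughly match the
    target matrix dist[i][j], by repeatedly nudging a random pair."""
    rng = random.Random(seed)
    pos = [[rng.random(), rng.random()] for _ in range(n)]
    for _ in range(steps):
        i, j = rng.sample(range(n), 2)
        dx = pos[j][0] - pos[i][0]
        dy = pos[j][1] - pos[i][1]
        d = math.hypot(dx, dy) or 1e-9
        # step > 0: pair is too far apart, move both toward each other;
        # step < 0: too close, push apart. Each move removes `rate` of the error.
        step = rate * (d - dist[i][j]) / d / 2
        pos[i][0] += step * dx
        pos[i][1] += step * dy
        pos[j][0] -= step * dx
        pos[j][1] -= step * dy
    return pos
```

Each nudge only corrects one pair and slightly disturbs that pair's relationships with every other word. That is why the picture keeps improving the longer it runs, and why a larger lexicon needs proportionally more steps.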

The same process applied to the Voynich manuscript produces something smoother, as you can see below. However, the VM has a much broader vocabulary, so I estimate I would need about nine hours of processing to arrive at the same degree of evolution as I have in the image above.

Reflecting on that, I thought I would try a new algorithm where words are attracted to each other in proportion to their similarity. The result was not interesting enough to show here, since the words rapidly collapsed into a small number of points. I am certain that this data would be interesting to analyze, but it is not interesting to look at.

Thinking about the fractal nature of this collapse, I thought I would use similarity data to model something like the growth of a plant. In the following image, I have taken the lexicon of the King James Genesis again and used it to grow a vine. I started with one arbitrarily chosen token, then added the others one at a time, joining each to whichever existing point was semantically nearest. For each new line, I tried up to ten random angles of growth to find one that did not overlap an existing line, before finally allowing the line to overlap others.

I am quite pleased with the result here, since it shows what I have always intuitively felt about the data--that there are semantic clusters of words in the lexicon.
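The vine-growing procedure translates fairly directly into code. In this sketch, words are added in list order, which is an assumption since the post doesn't say what order was used. Each word is attached to the most similar word already placed, and a segment-crossing test decides whether to accept a random angle. `grow_vine`, `sim` and the fixed segment length are hypothetical.

```python
import math
import random


def _crosses(p1, p2, p3, p4):
    """True if segment p1-p2 properly crosses segment p3-p4 (touching at an
    endpoint and collinear overlaps are not counted)."""
    def orient(a, b, c):
        return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    return (orient(p3, p4, p1) * orient(p3, p4, p2) < 0
            and orient(p1, p2, p3) * orient(p1, p2, p4) < 0)


def grow_vine(words, sim, seg_len=1.0, tries=10, seed=0):
    """Attach each word to the most similar already-placed word at a random
    angle, retrying up to `tries` times (tries >= 1) to avoid crossings."""
    rng = random.Random(seed)
    pos = {words[0]: (0.0, 0.0)}  # the arbitrarily chosen starting token
    segments = []
    for w in words[1:]:
        parent = max(pos, key=lambda p: sim(w, p))
        px, py = pos[parent]
        for _ in range(tries):
            a = rng.uniform(0, 2 * math.pi)
            end = (px + seg_len * math.cos(a), py + seg_len * math.sin(a))
            if not any(_crosses((px, py), end, s, e) for s, e in segments):
                break
        # if every try crossed something, the last angle is kept anyway
        pos[w] = end
        segments.append(((px, py), end))
    return pos, segments
```

Because attachment is always to the nearest word already on the vine, clusters of related words tend to grow out of a shared branch. Unrelated words end up on separate branches.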

The same approach applied to the Voynich manuscript produces a denser image, due to the greater breadth of the vocabulary:

But how does this compare to random data? To answer this question, I processed a random permutation of the Voynich manuscript, so the text would have the same word frequency and lexicon as the Voynich manuscript, but any semantic context would be destroyed. Here is the result:

Intuitively, I feel that the random data is spread more evenly than the Voynich data, but to get beyond intuition I need a metric I can use to measure it.
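The random control is easy to reproduce. Shuffling the word tokens keeps the lexicon and every word's frequency but destroys word order, and with it any context. The sketch below assumes whitespace-separated tokens; `shuffled_control` is a hypothetical name.

```python
import random


def shuffled_control(text, seed=None):
    """Return the words of `text` in random order: same lexicon and word
    frequencies, but word-to-word context is destroyed."""
    words = text.split()
    random.Random(seed).shuffle(words)
    return " ".join(words)
```

Running the same similarity analysis on several shuffles with different seeds gives a baseline range rather than a single random image to compare against.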

Good night.

When I depict this data in two dimensions, I'm really showing something like the shadow of a multi-dimensional object, and I have to catch that object at the right angle to get a meaningful shadow. In the following images, I have imagined the tokens in the text as automata that are bound by certain rules, but otherwise behaving randomly, in an attempt to produce an organic image of the data.

For example, each point of this image represents a word in the lexicon of the King James version of the book of Genesis. The words all started at random positions in space, but were made to iteratively organize themselves so that their physical distances from other words roughly reflected the semantic distance between them. After running for an hour, the process produced the following image:

There are clearly some groups forming within the universe of words. Since this process is iterative, the longer it runs the more ordered the data should become (up to a point)...but I haven't had the patience to run it for more than an hour.

The same process applied to the Voynich manuscript produces something smoother, as you can see below. However, the VM has a much broader vocabulary, so I estimate I would need about nine hours of processing to arrive at the same degree of evolution as I have in the image above.

Reflecting on that, I thought I would try a new algorithm where words are attracted to each other in proportion to their similarity. The result was not interesting enough to show here, since the words rapidly collapsed into a small number of points. I am certain that this data would be interesting to analyze, but it is not interesting to look at.

Thinking about the fractal nature of this collapse, I thought I would use similarity data to model something like the growth of a plant. In the following image, I have taken the lexicon of the King James Genesis again and used it to grow a vine. I started with one arbitrarily chosen token, then added the others by joining them to whatever existing point was semantically nearest. Each time, I tried up to ten times to find a random angle of growth that did not overlap another existing line, before finally allowing one line to overlap others.

I am quite pleased with the result here, since it shows what I have always intuitively felt about the data--that there are semantic clusters of words in the lexicon.

The same approach applied to the Voynich manuscript produces a denser image, due to the greater breadth of the vocabulary:

But how does this compare to random data? To answer this question, I processed a random permutation of the Voynich manuscript, so the text would have the same word frequency and lexicon as the Voynich manuscript, but any semantic context would be destroyed. Here is the result:

Intuitively, I feel that the random data is spread more evenly than the Voynich data, but to get beyond intuition I need a metric I can use to measure it.

Good night.

Monday, September 9, 2013

Problem with the problem with the Voynich Manuscript

There was a problem with the algorithm that produced the images I posted last night. (These things happen when you are tired.) The images for the English, Vulgar Latin and Wampanoag texts were basically correct, but the image for the Voynich Manuscript had a problem.

Part of the algorithm that produces the images is supposed to sort the lexicon in a way that will group similar words together, creating the islands and bands of brightness that the images show. However, the sorting algorithm did not adequately handle the case where there were subsets of the lexicon with absolutely no similarity to each other. As a result, the Voynich image only shows one corner of the full lexicon (about 10%).
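A greedy ordering that avoids this particular failure might look like the sketch below. It is not the original sorting algorithm, which the post doesn't describe, but an illustration of the fix. When the remaining words have no similarity at all to the last placed word, the ordering starts a new run instead of stopping, so every word ends up somewhere in the image. The function name is hypothetical.

```python
def order_lexicon(words, sim):
    """Greedy nearest-neighbour ordering so that similar words end up adjacent.
    Every word is placed exactly once: if none of the remaining words has any
    similarity to the last placed word (a disconnected subset of the lexicon),
    the walk restarts from the first remaining word instead of stopping."""
    remaining = list(words)
    if not remaining:
        return []
    order = [remaining.pop(0)]
    while remaining:
        last = order[-1]
        best = max(remaining, key=lambda w: sim(last, w))
        if sim(last, best) <= 0:
            best = remaining[0]  # no link: begin a new run (a new "island")
        remaining.remove(best)
        order.append(best)
    return order
```

If the input list is sorted by frequency, each new run starts from the most frequent word not yet placed.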

The full image is much darker, like a starry sky, implying a text that is less meaningful. That lends a little weight to the theory that the text may be (as Gordon Rugg has suggested) a meaningless hoax. However, I thought I should also test the possibility that it could be polyglot, since my samples so far have all been monoglot. With a polyglot text, my sorting algorithm would be hard pressed to group similar words together, because the lexicon would contain subsets of unrelated words.

After fixing my sorting algorithm, I produced a similarity map from the polyglot medieval collection Carmina Burana, then compared it to a similar-sized monoglot English text, and a similar-sized piece of the Voynich Manuscript. Here are the results.

First, the English text:

And now, the Carmina Burana. The image is actually much larger, because the lexicon is larger. Here, you can see the "starry sky" effect.

And last, the Voynich Manuscript. Again, the "starry sky" effect.

Of the images I have produced so far, the Voynich Manuscript looks most like the Carmina Burana. However, I have the following caveats:

Part of the algorithm that produces the images is supposed to sort the lexicon in a way that will group similar words together, creating the islands and bands of brightness that the images show. However, the sorting algorithm did not adequately handle the case where there were subsets of the lexicon with absolutely no similarity to each other. As a result, the Voynich image only shows one corner of the full lexicon (about 10%).

The full image is much darker, like a starry sky, implying a text that is less meaningful. That lends a little weight to the theory that the text may be (as Gordon Rugg has suggested) a meaningless hoax. However, I thought I should also test the possibility that it could be polyglot, since my samples so far have all been monoglot. With a polyglot text, my sorting algorithm would be hard pressed to group similar words together, because the lexicon would contain subsets of unrelated words.

After fixing my sorting algorithm, I produced a similarity map from the polyglot medieval collection Carmina Burana, then compared it to a similar-sized monoglot English text, and a similar-sized piece of the Voynich Manuscript. Here are the results.

First, the English text:

And now, the Carmina Burana. The image is actually much larger, because the lexicon is larger. Here, you can see the "starry sky" effect.

And last, the Voynich Manuscript. Again, the "starry sky" effect.

Of the images I have produced so far, the Voynich Manuscript looks most like the Carmina Burana. However, I have the following caveats:

- Carmina Burana is much smaller than the VM. To do a better comparison, I will need a medieval polyglot/macaronic text around 250 KB in size. So far I have not found one.

- I should attempt to produce the same type of text using Cardan grilles, to test Gordon Rugg's hypothesis.

Lastly, I want to add a note about the narrow circumstances under which I think Gordon Rugg may be right, but generally why I don't think his theory will turn out to be correct.

I don't think the VM was generated using Cardan grilles because the work required to generate a document the size of the VM would be significant, and there would be an easier way to do it. It would be much easier to invent a secret alphabet and simply babble along in a vernacular language. The Rugg hypothesis needs to address the question of why the extra effort would have been worth it to the con artist that generated the work.

The narrow circumstance under which this would make sense, to me, is if the VM were generated by someone using something like Cardan grilles as an oracular device, believing that he was thereby receiving a divine message.

However, if the VM is a hoax, I think we will one day decipher it, and we will find that it says something like "the king is a sucker. I can't wait till I'm done with this thing. I'm so sick of vellum...."

Good night.

Saturday, September 7, 2013

Meaning in the Voynich Manuscript

It turns out there is an established algorithm for measuring semantic distance between words in a text that is somewhat similar to the algorithm I have developed. It is called Second-Order Co-occurrence Pointwise Mutual Information (SOC-PMI), and there is a paper about it on the University of Ottawa website.

However, my algorithm is a little bit simpler than SOC-PMI, and it treats context words differently based on whether they occur before or after the subject word. I like mine better.
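For comparison, here is a minimal sketch of a directional measure. Context words are counted separately by side (before or after, within a small window), weighted by positive pointwise mutual information, and compared by cosine similarity. This is neither SOC-PMI nor my own unpublished algorithm, only an illustration of the before/after distinction. The window size and function names are assumptions.

```python
import math
from collections import Counter, defaultdict


def directional_ppmi(tokens, window=2):
    """Map each word to {(side, context_word): PPMI}, where side is 'L' for
    context words that precede it and 'R' for those that follow it."""
    counts = defaultdict(Counter)
    for i, word in enumerate(tokens):
        for k in range(1, window + 1):
            if i - k >= 0:
                counts[word][("L", tokens[i - k])] += 1
            if i + k < len(tokens):
                counts[word][("R", tokens[i + k])] += 1
    total = sum(sum(c.values()) for c in counts.values())
    word_totals = {w: sum(c.values()) for w, c in counts.items()}
    ctx_totals = Counter()
    for c in counts.values():
        ctx_totals.update(c)
    vectors = {}
    for w, c in counts.items():
        vectors[w] = {}
        for ctx, n in c.items():
            # PMI = log(P(w, ctx) / (P(w) * P(ctx))); keep positive values only
            pmi = math.log(n * total / (word_totals[w] * ctx_totals[ctx]))
            if pmi > 0:
                vectors[w][ctx] = pmi
    return vectors


def similarity(u, v):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(x * v[k] for k, x in u.items() if k in v)
    nu = math.sqrt(sum(x * x for x in u.values()))
    nv = math.sqrt(sum(x * x for x in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0
```

In the toy text "the cat sat the dog sat" with `window=1`, "cat" and "dog" both follow "the" and precede "sat", so their similarity is 1.0.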

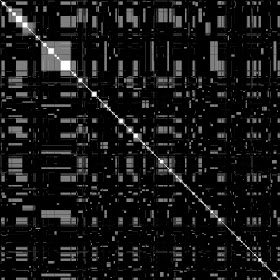

Using my algorithm (which I may someday put online, if I ever have time to tidy up the code), I have generated a series of images. These images show the semantic relationship between words in the Voynich manuscript and several other texts that are approximately the size of the Voynich manuscript. In these images, each point along the x and y axes represents a word in the lexicon of the text, and the brightness at any point (x, y) represents the semantic similarity between the words x and y. There is a bright line at x = y because words are completely similar to themselves, and clusters of brightness represent clusters of words with common meanings.
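An image of this kind can be produced from any similarity function. The sketch below writes a plain-text PGM (P2) grayscale image, with brightness 255 for a similarity of 1 and 0 for a similarity of 0. The PGM format and the `similarity_pgm` name are choices made for illustration, not necessarily how the original images were made.

```python
def similarity_pgm(words, sim):
    """Render an N x N plain PGM ('P2') image in which the pixel at (x, y)
    has brightness proportional to sim(words[x], words[y]), clamped to [0, 1]."""
    n = len(words)
    lines = ["P2", f"{n} {n}", "255"]  # magic number, width height, max value
    for y in range(n):
        row = []
        for x in range(n):
            s = min(max(sim(words[x], words[y]), 0.0), 1.0)
            row.append(str(round(255 * s)))
        lines.append(" ".join(row))
    return "\n".join(lines) + "\n"
```

Usage would be something like `open("genesis.pgm", "w").write(similarity_pgm(lexicon, sim))`. The lexicon should be sorted first, since the islands only appear when similar words sit next to each other along the axes.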

First, here is a control text created from 2000 words arranged in random order. The text is meaningless, so the image is basically blank except the line x = y.

The following image is generated from a text in Vulgar Latin. Because Latin is an inflected language, and my algorithm doesn't recognize inflected forms as belonging to the same word, there are many small islands of similarity.

The next image is taken from an English text. Due to the more analytic nature of English, the islands of similarity are larger.

The next image is taken from a text in Wampanoag. I expected this text to be more like the Latin text, but I think the source text is actually smaller in terms of the number of words (though similar in terms of the number of graphemes).

Now, for the cool one. This is the Voynich Manuscript.

I wonder if this shows a text written in two languages. The light gray square in the upper left corner, together with the two gray bands in the same rows and columns, represents a subset of words that exist in their own context, a context not shared with the rest of the text. One possible explanation would be that the "common" language of the text contains within it segments of text in another "minority" language.

About 25% of the lexicon of the text belongs to the minority language, and the remaining 75% belongs to the common language. The minority language doesn't contain any significant islands of brightness, so it may be noise of some kind--either something like cryptographic nulls, or perhaps something like litanies of names. If I have time, I'll try to split the manuscript into two separate texts, one in each language, and see what the analysis looks like at that point.

Good night.

However, my algorithm is a little bit simpler than SOC-PMI, and it treats context words differently based on whether they occur before or after the subject word. I like mine better.

Using my algorithm (which I may someday put online, if I ever have time to tidy up the code), I have generated a series of images. These images show the semantic relationship between words in the Voynich manuscript and several other texts that are approximately the size of the Voynich manuscript. In these images, each point along the x and y axes represents a word in the lexicon of the text, and the brightness at any point (x, y) represents the semantic similarity between the words x and y. There is a bright line at x = y because words are completely similar to themselves, and clusters of brightness represent clusters of words with common meanings.

First, here is a control text created from 2000 words arranged in random order. The text is meaningless, so the image is basically blank except the line x = y.

The following image is generated from a text in Vulgar Latin. Because it is an inflected language, and my algorithm doesn't recognized inflected forms as belonging to the same word, there are many small islands of similarity.

The next image is taken from an English text. Due to the more analytic nature of English, the islands of similarity are larger.

The next image is taken from a text in Wampanoag. I expected this text to be more like the Latin text, but I think the source text is actually smaller in terms of the number of words (though similar in terms of the number of graphemes).

Now, for the cool one. This is the Voynich Manuscript.

I wonder if this shows a text written in two languages. The light gray square in the upper left corner, together with the two gray bands in the same rows and columns, represents a subset of words that exist in their own context, a context not shared with the rest of the text. One possible explanation would be that the "common" language of the text contains within it segments of text in another "minority" language.

About 25% of the lexicon of the text belongs to the minority language, and the remaining 75% belongs to the common language. The minority language doesn't contain any significant islands of brightness, so it may be noise of some kind--either something like cryptographic nulls, or perhaps something like litanies of names. If I have time, I'll try to split the manuscript into two separate texts, one in each language, and see what the analysis looks like at that point.

Good night.

A Problem with the Voynich Manuscript

ere yamji bi ilan hūntahan sain nure be omire jakade... (Manchu: "This evening, as I drink three cups of good wine...")

There is a problem with the Voynich manuscript, and the problem is on the level of the apparent words, not the graphemes.

I mentioned in a post a while back that I have an algorithm that roughly measures the contextual similarity between words in a large text. Over the years I have gathered samples of the text of the book of Genesis because it is approximately the same size as the Voynich manuscript, and it has been translated into many languages.

Since I have recently been working on another (unrelated) cipher, I have developed a set of tools for measuring solutions, and I thought I could use them in combination with my semantic tools to look at the VM.

What I am finding right now is interesting. I'm pulling the top 14 words from sample texts and creating a 14x14 grid of similarities between them. The most frequent words tend to be more functional and less content-bearing, so I am really looking for groups like prepositions, pronouns, and so on.
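The grid is straightforward to compute once a similarity function is available. In this sketch, `sim` stands in for whatever contextual-similarity measure is used. The summary statistic, the mean of the off-diagonal cells, is an assumption about how to compare one text against another.

```python
from collections import Counter


def top_word_grid(tokens, sim, n=14):
    """Return the n most frequent words, their n x n similarity grid, and the
    mean of the off-diagonal entries (self-similarity excluded)."""
    top = [w for w, _ in Counter(tokens).most_common(n)]
    grid = [[sim(a, b) for b in top] for a in top]
    off = [grid[i][j] for i in range(len(top)) for j in range(len(top)) if i != j]
    mean = sum(off) / len(off) if off else 0.0
    return top, grid, mean
```

Excluding the diagonal matters, because every word is perfectly similar to itself and would otherwise inflate the average for every text equally.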

In highly inflected languages, the top 14 words are not especially similar to each other. The reason for this is that prepositions in inflected languages tend to go with different cases, and inflected nouns are treated as entirely different words by my algorithm. So, for example, in Latin all of the top 14 words have a similarity score of lower than 0.2. In Wampanoag, the majority are under 0.1. In less inflected languages, like Middle English, Early Modern English, Italian and Czech, scores more commonly fall in the range 0.2-0.3, with a few in the 0.3-0.4 range.

The scores from the Voynich manuscript, however, are disconcertingly high. All of the top 14 words are very similar to each other, falling in the 0.3-0.5 range. This suggests that the most common words in the Voynich manuscript are all used in roughly similar contexts. They average just a little under the numbers that I get for a completely random text.

Something is going on here that makes the words look as though they are in random order.

There is a problem with the Voynich manuscript, and the problem is on the level of the apparent words, not the graphemes.

I mentioned in a post a while back that I have an algorithm that roughly measures the contextual similarity between words in a large text. Over the years I have gathered samples of the text of the book of Genesis because it is approximately the same size as the Voynich manuscript, and it has been translated into many languages.

Since I have recently been working on another (unrelated) cipher, I have developed a set of tools for measuring solutions, and I thought I could use them in combination with my semantic tools to look at the VM.

What I am finding right now is interesting. I'm pulling the top 14 words from sample texts and creating a 14x14 grid of similarities between them. The most frequent words tend to be more functional and less content-bearing, so I am really looking for groups like prepositions, pronouns, and so on.

In highly inflected languages, the top 14 words are not especially similar to each other. The reason for this is that prepositions in inflected languages tend to go with different cases, and inflected nouns are treated as entirely different words by my algorithm. So, for example, in Latin all of the top 14 words have a similarity score of lower than 0.2. In Wampanoag, the majority are under 0.1. In less inflected languages, like Middle English, Early Modern English, Italian and Czech, scores more commonly fall in the range 0.2-0.3, with a few in the 0.3-0.4 range.

The scores from the Voynich manuscript, however, are disconcertingly high. All of the top 14 words are very similar to each other, falling in the 0.3-0.5 range. This suggests that the most common words in the Voynich manuscript are all used in roughly similar contexts. They average just a little under the numbers that I get for a completely random text.

Something is going on here that makes the words look as though they are in random order.

Thursday, September 5, 2013

Carcharias (a cipher)

Over the last few years, I've been studying modern cipher algorithms. I've written implementations of a number of cipher algorithms as a way of understanding how they work, and I'm impressed by their economy.

But I also wonder whether there might be advantages to ciphers with huge keys. In this post, I'll describe a big, ugly cipher I'll call Carcharias, which uses massive keys and lots of memory. Since Carcharias is a big fish, I'll talk in terms of bytes instead of bits.

The Key

Carcharias is a Feistel cipher with a block size of 512 bytes and a key size of 16,777,216 bytes. The key is huge, right? But you could store hundreds of them on a modern thumb drive without any problem, and it will fit in a modern processor's memory quite easily. As with any other cipher, the ideal key is as random as possible, but the question of how to generate a good random key is outside the scope of this post.

The Round Function

Since Carcharias is a Feistel cipher, it processes the block in two halves (256 bytes) using a round function. In the following pseudocode for the round function, the key is treated as a three-dimensional array of bytes (256 x 256 x 256), and the subkey, input and output are treated as arrays of bytes. This isn't the most efficient implementation...it's just meant to be illustrative.

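```c
/* Round function (illustrative, not optimized). The key is viewed as a
   256 x 256 x 256 array of bytes; subkey, input and output are 256 bytes. */
void carcharias_round(unsigned char key[256][256][256],
                      const unsigned char subkey[256],
                      const unsigned char input[256],
                      unsigned char output[256])
{
    for (int i = 0; i < 0x100; ++i) {
        output[i] = 0;
        for (int j = 0; j < 0x100; ++j) {
            /* index the key with the input byte XORed with the subkey byte */
            output[i] ^= key[i][j][input[j] ^ subkey[j]];
        }
    }
}
```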

Mathematically, it doesn't get much simpler than this. You've got a couple of XOR operations and some pointer math, but every bit of the output depends on every bit of the input. Put that into a Feistel network and you have pretty good confusion and diffusion.
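The following Python sketch shows how the round function fits into the Feistel structure for encryption and decryption. No key schedule or round count is defined here. The subkeys are therefore passed in as a list of 256-byte values, and the number of rounds is simply the number of subkeys supplied; both are assumptions. It is slow and purely illustrative.

```python
HALF = 256  # bytes per half-block; the full block is 512 bytes


def round_function(key, subkey, data):
    """Carcharias round function. `key` is a flat bytes object of 256**3
    bytes, read as key[i][j][x] = key[(i << 16) | (j << 8) | x]."""
    out = bytearray(HALF)
    for i in range(HALF):
        acc = 0
        row = i << 16
        for j in range(HALF):
            acc ^= key[row | (j << 8) | (data[j] ^ subkey[j])]
        out[i] = acc
    return bytes(out)


def xor_bytes(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def encrypt(key, subkeys, block):
    """Feistel encryption: each round maps (L, R) to (R, L xor F(R))."""
    left, right = block[:HALF], block[HALF:]
    for sk in subkeys:
        left, right = right, xor_bytes(left, round_function(key, sk, right))
    return left + right


def decrypt(key, subkeys, block):
    """Undo encrypt() by running the rounds with the subkeys reversed."""
    left, right = block[:HALF], block[HALF:]
    for sk in reversed(subkeys):
        left, right = xor_bytes(right, round_function(key, sk, left)), left
    return left + right
```

A real key would be 16,777,216 random bytes, for example from `os.urandom(256 ** 3)`. Decryption reuses the same round function in the forward direction, which is why the round function itself never needs to be invertible.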

Incidentally, in a few years I think processors will probably carry enough memory that you could implement a 4 GB key, treated as a four-dimensional array, in which case Carcharias could be replaced by Megalodon, using this line instead:

```c
output[i] ^= key[i][j][input[j]][subkey[j]];
```

It's big. It's ugly. It's brutish.

Advantages

Provided the key is very random and the key transmission secure, I think Carcharias and Megalodon can only be attacked by brute force. The brute force attack must use a large amount of memory, which might make it harder to farm out to a bunch of processors running in parallel.

If I have time, I'll write an implementation of Carcharias and post it on GitHub.

Note (12/2/2013): This has some potential weaknesses if the key (which is essentially a huge S-box) is too close to a linear function. Also, it's really overkill, and there is no need for something like this in the world :)

But I also wonder whether there might be advantages to ciphers with huge keys. In this post, I'll describe a big, ugly cipher I'll call Carcharias, which uses massive keys and lots of memory. Since Carcharias is a big fish, I'll talk in terms of bytes instead of bits.

The Key

Carcharias is a Feistel cipher with a block size of 512 bytes and a key size of 16,777,216 bytes. The key is huge, right? But you could store hundreds of them on a modern thumb drive without any problem, and it will fit in a modern processor's memory quite easily. As with any other cipher, the ideal key is as random as possible, but the question of how to generate a good random key is outside the scope of this post.

The Round Function

Since Carcharias is a Feistel cipher, it processes the block in two halves (256 bytes) using a round function. In the following pseudocode for the round function, the key is treated as a three-dimensional array of bytes (256 x 256 x 256), and the subkey, input and output are treated as arrays of bytes. This isn't the most efficient implementation...it's just meant to be illustrative.

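/* Carcharias round function: each output byte XORs together one key
   byte per input byte, selected by (i, j, input[j] ^ subkey[j]). */
void round_function(unsigned char key[256][256][256],
                    const unsigned char input[256],
                    const unsigned char subkey[256],
                    unsigned char output[256]) {
    for (int i = 0; i < 0x100; ++i) {
        output[i] = 0;
        for (int j = 0; j < 0x100; ++j) {
            output[i] ^= key[i][j][input[j] ^ subkey[j]];
        }
    }
}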

Mathematically, it doesn't get much simpler than this. You've got a couple of XOR operations and some pointer math, but every bit of the output is dependent on every bit of the input, because each output byte i takes one byte from a separate 256-entry table key[i][j] for every input byte j. You throw that into a Feistel network and you have pretty good confusion and diffusion.
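To show how the round function slots into a Feistel network, here is a minimal C sketch of encrypting and decrypting one 512-byte block. It fills in two things the post leaves open, so treat both as assumptions: the round count (ROUNDS = 8 is arbitrary) and the key schedule (the caller just supplies a hypothetical flat subkeys array with one 256-byte subkey per round). The 16 MiB key is passed as a flat pointer indexed as (i * 256 + j) * 256 + x, the same memory layout as key[i][j][x].

```c
#include <stddef.h>
#include <string.h>

#define HALF 256   /* bytes per Feistel half; the block is 2 * HALF = 512 */
#define ROUNDS 8   /* assumption: the post does not fix a round count */

/* Round function from the post, with key[i][j][x] stored flat at
   key[(i * 256 + j) * 256 + x] (16,777,216 bytes in total). */
static void carcharias_round(const unsigned char *key,
                             const unsigned char *input,
                             const unsigned char *subkey,
                             unsigned char *output) {
    for (size_t i = 0; i < HALF; ++i) {
        unsigned char acc = 0;
        for (size_t j = 0; j < HALF; ++j)
            acc ^= key[(i * HALF + j) * HALF + (input[j] ^ subkey[j])];
        output[i] = acc;
    }
}

/* Feistel encryption of one 512-byte block in place. subkeys is a
   hypothetical flat array of ROUNDS * HALF bytes, one subkey per round;
   the post does not say how these would be derived from the key. */
void carcharias_encrypt(const unsigned char *key, const unsigned char *subkeys,
                        unsigned char *block) {
    unsigned char *left = block, *right = block + HALF;
    unsigned char f[HALF], next_right[HALF];
    for (int r = 0; r < ROUNDS; ++r) {
        carcharias_round(key, right, subkeys + (size_t)r * HALF, f);
        for (size_t k = 0; k < HALF; ++k)
            next_right[k] = left[k] ^ f[k];   /* R' = L xor F(R, K_r) */
        memcpy(left, right, HALF);            /* L' = R */
        memcpy(right, next_right, HALF);
    }
}

/* Decryption reruns the same round function with the subkeys reversed. */
void carcharias_decrypt(const unsigned char *key, const unsigned char *subkeys,
                        unsigned char *block) {
    unsigned char *left = block, *right = block + HALF;
    unsigned char f[HALF], prev_left[HALF];
    for (int r = ROUNDS - 1; r >= 0; --r) {
        carcharias_round(key, left, subkeys + (size_t)r * HALF, f);
        for (size_t k = 0; k < HALF; ++k)
            prev_left[k] = right[k] ^ f[k];   /* L = R' xor F(L', K_r) */
        memcpy(right, left, HALF);            /* R = L' */
        memcpy(left, prev_left, HALF);
    }
}
```

Decryption never has to invert the round function; it only recomputes it with the subkeys in reverse order. That is what lets a Feistel structure use a many-to-one lookup-table round function like this one.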

Incidentally, in a few years I think processors will probably carry enough memory that you could implement a 4 GB key, treated as a four-dimensional array, in which case Carcharias could be replaced by Megalodon, using this line instead:

output[i] ^= key[i][j][input[j]][subkey[j]];

It's big. It's ugly. It's brutish.
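A 4 GiB four-dimensional array is awkward to declare directly, so one plausible layout for a Megalodon key is a single flat allocation. This sketch, with hypothetical helper names megalodon_offset and megalodon_round, shows the index arithmetic behind key[i][j][input[j]][subkey[j]]: since 256^4 = 2^32, the offsets need 64-bit arithmetic. Note that the subkey byte now selects a fourth table dimension instead of being XORed into the input byte.

```c
#include <stdint.h>

/* Total Megalodon key size: 256^4 = 2^32 bytes (4 GiB). */
#define MEGALODON_KEY_BYTES ((uint64_t)1 << 32)

/* Hypothetical helper: flat offset of key[i][j][x][s] when the key is one
   contiguous 4 GiB allocation. 64-bit arithmetic avoids overflow. */
uint64_t megalodon_offset(unsigned i, unsigned j, unsigned x, unsigned s) {
    return (((uint64_t)i * 256 + j) * 256 + x) * 256 + s;
}

/* Megalodon round: same shape as Carcharias, but the subkey byte picks
   the fourth index rather than being XORed with the input byte. */
void megalodon_round(const unsigned char *key, const unsigned char *input,
                     const unsigned char *subkey, unsigned char *output) {
    for (unsigned i = 0; i < 256; ++i) {
        unsigned char acc = 0;
        for (unsigned j = 0; j < 256; ++j)
            acc ^= key[megalodon_offset(i, j, input[j], subkey[j])];
        output[i] = acc;
    }
}
```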

Advantages

Provided the key is very random and the key transmission secure, I think Carcharias and Megalodon can only be attacked by brute force. The brute force attack must use a large amount of memory, which might make it harder to farm out to a bunch of processors running in parallel.

If I have time, I'll write an implementation of Carcharias and post it on GitHub.

Note (12/2/2013): This has some potential weaknesses if the key (which is essentially a huge S-box) is too close to a linear function. Also, it's really overkill, and there is no need for something like this in the world :)
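The note leaves "too close to a linear function" informal. One standard way to quantify it for a single 256-byte slice key[i][j][*] is its nonlinearity: the Hamming distance to the nearest affine function, minimized over every nonzero XOR combination of output bits. The sketch below computes that with a fast Walsh-Hadamard transform. The name sbox_nonlinearity is hypothetical, and checking slices one at a time is only one possible diagnostic, not a full analysis of the cipher.

```c
#include <stdlib.h>

/* Parity of an 8-bit value: 1 if an odd number of bits are set. */
static int parity8(unsigned v) {
    v ^= v >> 4;
    v ^= v >> 2;
    v ^= v >> 1;
    return (int)(v & 1u);
}

/* Nonlinearity of an 8-bit table (e.g. one key slice key[i][j][*]).
   0 means some XOR of output bits is an affine function of the input. */
int sbox_nonlinearity(const unsigned char sbox[256]) {
    int max_abs = 0;
    for (unsigned b = 1; b < 256; ++b) {          /* every nonzero output mask */
        int w[256];
        for (unsigned x = 0; x < 256; ++x)        /* (-1)^(b . S(x)) */
            w[x] = parity8(b & sbox[x]) ? -1 : 1;
        /* In-place fast Walsh-Hadamard transform: afterwards
           w[a] = sum over x of (-1)^(b . S(x) xor a . x). */
        for (int len = 1; len < 256; len <<= 1)
            for (int start = 0; start < 256; start += len << 1)
                for (int k = start; k < start + len; ++k) {
                    int u = w[k], v = w[k + len];
                    w[k] = u + v;
                    w[k + len] = u - v;
                }
        for (int a = 0; a < 256; ++a)
            if (abs(w[a]) > max_abs) max_abs = abs(w[a]);
    }
    return 128 - max_abs / 2;   /* 2^(n-1) - max|W| / 2 with n = 8 */
}
```

No 8-bit table can score above 120, and for comparison the AES S-box scores 112. Applying the check to every one of the 65,536 slices is straightforward, if slow.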

Tuesday, September 3, 2013

Theory about pre-human language

I have a theory about the course of the evolution of human language that is too long to put into a blog post. I have given it its own page, called Pre-Human Language.

Sunday, September 1, 2013

The Ants and the Cricket

I took a shot at translating one of Aesop's fables into the Grande Ronde dialect of Chinuk Wawa, as documented in Henry Zenk's excellent dictionary. Here it is. (If any Wawa speakers happen to come across this, I am happy to hear feedback and corrections.)

The original was "The Ants and the Grasshopper". I made it "The Ants and the Cricket", since a cricket is called a "useless bird" (kʰəltəs-kələkələ), and the cricket sings, while the grasshopper doesn't.

t’siqʰwaʔ pi kʰəltəs-kələkələ

The ants and the cricket

kʰul-iliʔi ixt san t’siqʰwaʔ ɬas-munk-tlay məkʰmək ikta wam-iliʔi ɬas-munk-iskam.

One day in the winter, the ants were drying food which they had gathered in the summer.

kʰəltəs-kələkələ ya-chaku miməlust-ulu.

The cricket became extremely hungry.

ya-ɬatwa kʰapa t’siqʰwaʔ ɬas-haws, ya-aləksh məkʰmək.

He went to the ants' house, and he begged for food.

uk t’siqʰwaʔ ɬas-wawa, "pus-ikta wam-iliʔi wik ma-munk-iskam məkʰmək?"

The ants said to him, "Why did you not gather food in the summer?"

kʰəltəs-kələkələ ya-wawa, "wik na-t’uʔan lili pus na-munk-iskam məkʰmək.

The cricket said, "I didn't have time to gather food.

kʰanawi wam-iliʔi na-shati shati."

The whole summer long I sang and sang."

ɬas-munk-hihi-yaka, wawa, "pus kʰanawi wam-iliʔi ma-hihi pi shati, aɬqi kʰanawi kʰul-iliʔi ma-tanis pi ulu."

They laughed at him, and said, "If you laugh and sing all summer, then you dance and hunger all winter."
